“Breaking Down” the Ranking of Music Albums

Earlier this week, I was talking with a coworker about my veneration for the sad-sack depressive genius of Ben Gibbard, and she asked me to rank all of the Death Cab for Cutie (DCFC) albums. I know the discography quite well, so I quickly gave a ranking based on my general feelings about the albums. Though I felt rather confident about my answer, I still wondered if there was a way to approach the question a little more quantitatively. I didn’t have any truly quantitative readouts available (e.g., lifetime play counts), but I wondered if I could put numbers on my qualitative assessments: score each song individually, average the song scores into an album score, and then rank the albums by that number.

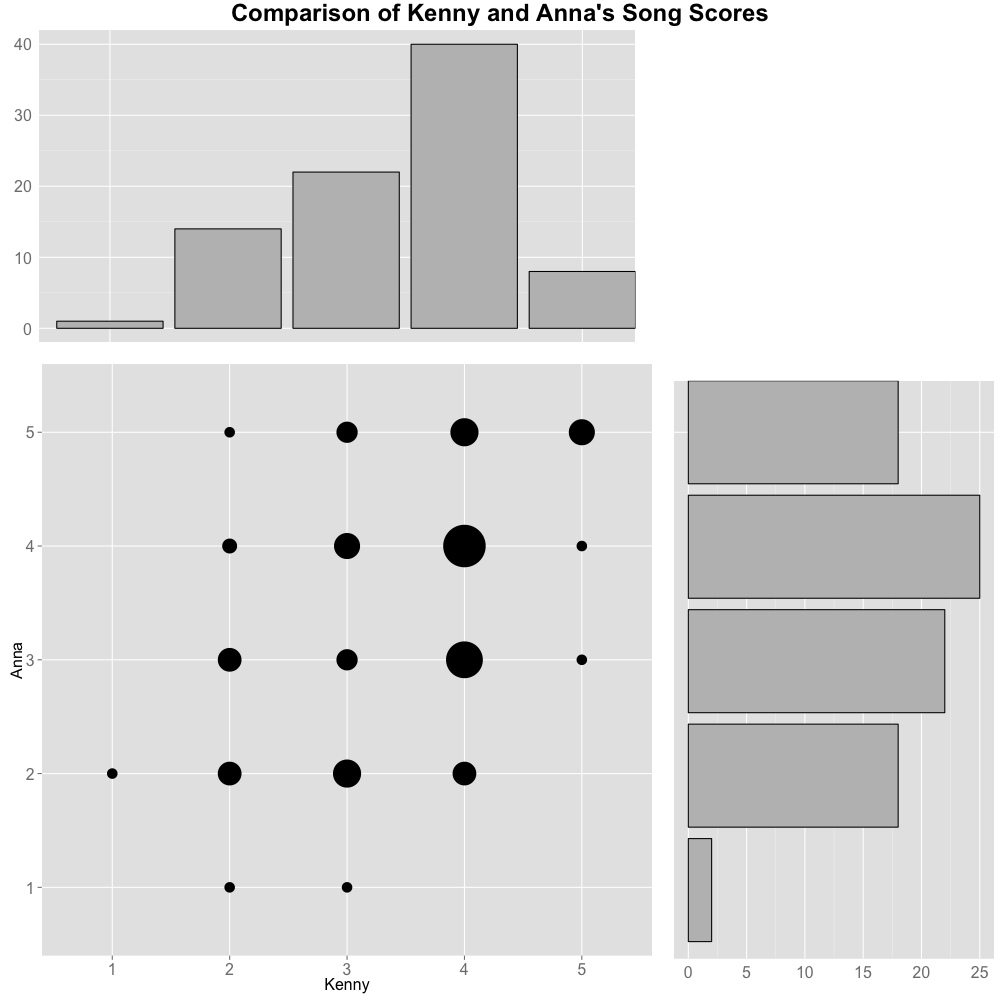

Thus, I went through and scored all 85 songs (8 albums, each with 10 or 11 songs) on a five-point scale (1: I hate it; 2: I dislike it, or I’m purposefully less familiar with it because it doesn’t interest me; 3: I’m OK with hearing it but don’t feel strongly about it; 4: I like the song; 5: I love the song, or it’s one of my favorites). Since my girlfriend, Anna, is also a big fan of DCFC, I had her do the same thing. Not surprisingly, Anna and I used the scale a little differently: Anna “loved” and “disliked” many more songs than I did, whereas I “liked” far more songs. In general, our ratings were relatively consistent, though with notable differences, as reflected in a Spearman correlation of about 0.42. (Note that the area of each circle in the “scatterplot” represents the number of songs to which Anna and I gave that particular pair of scores):

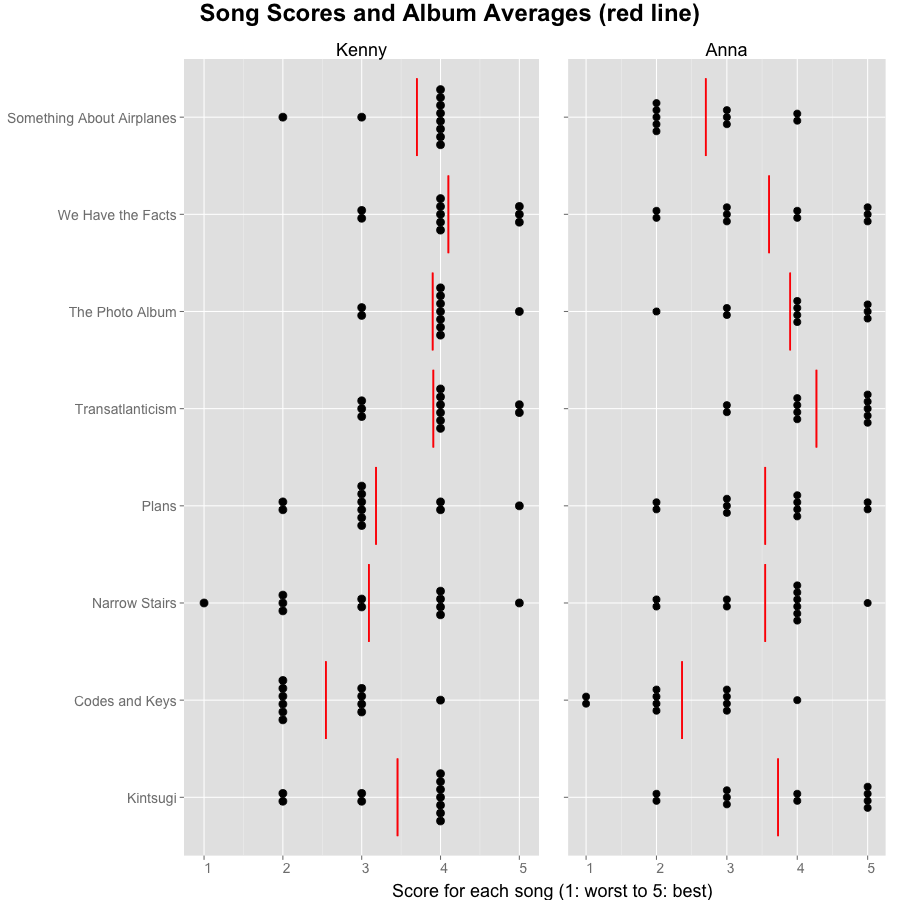
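For anyone who wants to try this on their own ratings, here is a minimal Python sketch of one common way to compute a Spearman correlation when many scores are tied, as they inevitably are on a 1-to-5 scale: rank each list (tied values share the average of their positions), then take the Pearson correlation of the ranks. The analysis in this post was done in R, so this is only an illustration; the function names and the six example scores are made up and are not the real data.

```python
from statistics import mean

def average_ranks(values):
    """Rank values 1..n; tied values all get the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of 1-based positions in the run
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks

def pearson(x, y):
    """Pearson correlation; undefined (division by zero) if either list is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def spearman(x, y):
    """Spearman correlation = Pearson correlation of the tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))

# Hypothetical 1-5 scores for six songs (placeholders, not the real ratings).
mine = [4, 4, 3, 5, 2, 4]
annas = [5, 2, 3, 5, 2, 4]
rho = spearman(mine, annas)
print(f"Spearman correlation: {rho:.2f}")
```

With only five possible scores, most songs end up tied with many others, which is why the tie handling matters more here than it would for continuous data.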

Separating the ratings by album gives the following graph:

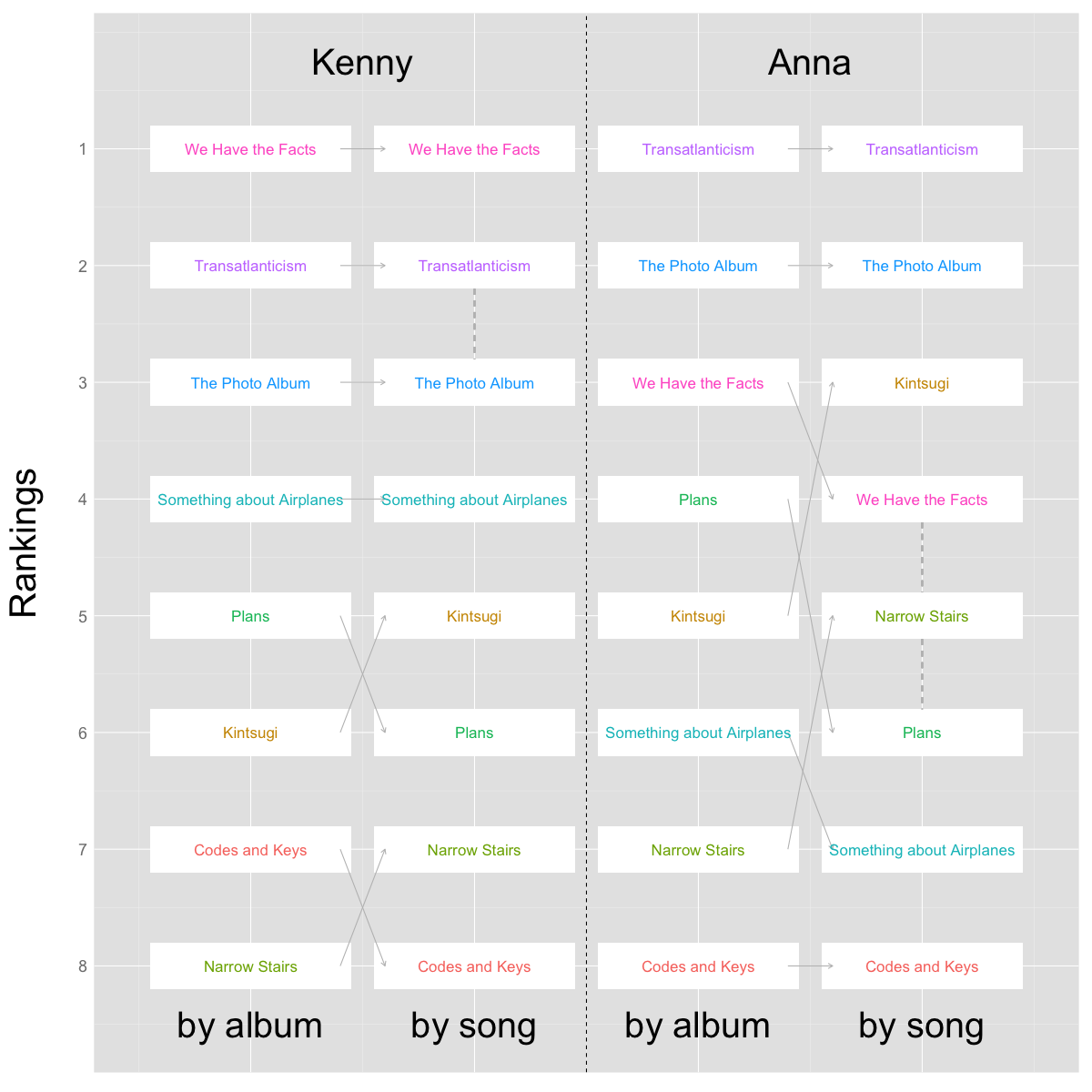
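The song-scoring method itself boils down to grouping the scores by album, averaging, and sorting. A rough Python sketch of that step, assuming the ratings are stored as (album, song, score) rows, might look like the following. The album names, song names, and scores are placeholders, and `rank_albums` is a hypothetical helper rather than anything from the original R analysis.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical (album, song, score) rows; all values are placeholders.
ratings = [
    ("Album A", "Song 1", 5),
    ("Album A", "Song 2", 3),
    ("Album B", "Song 1", 4),
    ("Album B", "Song 2", 5),
    ("Album C", "Song 1", 2),
    ("Album C", "Song 2", 3),
]

def rank_albums(rows):
    """Average the song scores per album and sort albums from best to worst."""
    scores = defaultdict(list)
    for album, _song, score in rows:
        scores[album].append(score)
    averages = {album: mean(vals) for album, vals in scores.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)

ranking = rank_albums(ratings)
for position, (album, avg) in enumerate(ranking, start=1):
    print(f"{position}. {album}: {avg:.2f}")
```

Using the mean treats the 1-to-5 scale as if the steps were evenly spaced, which is a simplification, but it keeps the album score easy to interpret.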

With the average scores in hand, I can now compare the rankings from the two methods:

Overall, the song-scoring method gave similar but distinct results. My top four albums didn’t change, but I did notice changes at the bottom of my ranking: the songs on “Kintsugi” scored higher than I expected (higher than “Plans”), and I (in retrospect, not surprisingly) disliked the songs on “Codes and Keys” far more than those on any other album. Anna’s results changed even more: though her top two albums didn’t change, she also liked the songs on “Kintsugi” way more than anticipated, and didn’t like “Something About Airplanes” as much as she thought. While I’m a little biased, I prefer the song-scoring method, and I think the rankings I got from it were truer to my opinion than my initial off-the-cuff ranking.
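One simple way to make this kind of comparison concrete is to count how many places each album moved between the two rankings. The Python sketch below does that for two ordered lists; `rank_shifts` is a hypothetical helper and the album names are placeholders, not the actual rankings discussed above.

```python
def rank_shifts(gut_order, scored_order):
    """Places each album moved; positive means it ranked higher under song scoring."""
    scored_position = {album: i for i, album in enumerate(scored_order)}
    return {album: i - scored_position[album] for i, album in enumerate(gut_order)}

# Hypothetical rankings, best album first (placeholders only).
gut = ["Album A", "Album B", "Album C", "Album D"]
scored = ["Album A", "Album B", "Album D", "Album C"]

shifts = rank_shifts(gut, scored)
for album, shift in shifts.items():
    print(f"{album}: {shift:+d}")
```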

Overall, I think trying the new method was both useful and interesting. I’m not quite sure where the discrepancy between the two ways of ranking comes from: it’s possible we’re just not that good at ranking how much we like albums off the cuff, or perhaps there’s an intangible factor (such as the nostalgia we feel for an album as a whole, separate from the songs themselves) that distinguishes the album and song levels. While the song-scoring method isn’t necessarily superior for everyone, it does give me more confidence in understanding how and why I rank the albums where I do. Sure, this exercise does seem a little irrationally excessive, but fandom itself is inherently rather irrational, and I’m fine putting this extra level of effort into analyzing things I really enjoy.

Tools: RStudio, with ggplot2 and gridExtra.